John Chiverton, Majid Mirmehdi, Xianghua Xie

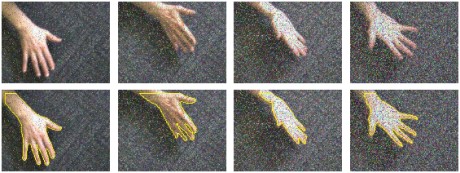

Tracking an object while simultaneously identifying an accurate outline of it is a difficult computer vision problem because of the changing nature of the high-dimensional image information. Prior information, such as probability distribution functions over a prior definition of shape, is often included in models to alleviate potential problems due to, e.g., ambiguity as to what should actually be tracked in the image data. However, supervised learning and/or training is not always possible for new, unseen objects or unforeseen configurations of shape, e.g. for silhouettes of 3-D objects. We are therefore currently investigating ways to include high-level shape information in active-contour-based tracking frameworks without a supervised pre-processing stage.
