Object modelling from sparse and misaligned 3D and 4D data has important applications in medical imaging, where visualising and characterising the shape of, e.g., an organ or tumour is often needed to establish a diagnosis or to plan surgery. Two common issues in medical imaging are large gaps between the 2D image slices that make up a dataset, and misalignments between these slices caused by the patient's movements between their respective acquisitions. These gaps and misalignments make automatic analysis of the data particularly challenging: recovering the complete shape of the object requires both interpolation and registration. This work focuses on the integrated registration, segmentation and interpolation of such sparse and misaligned data. We developed a framework that is flexible enough to model objects of various shapes, from data with arbitrary spatial configuration and from a variety of imaging modalities (e.g. CT scan, MRI).

ISISD: Integrated Segmentation and Interpolation of Sparse Data

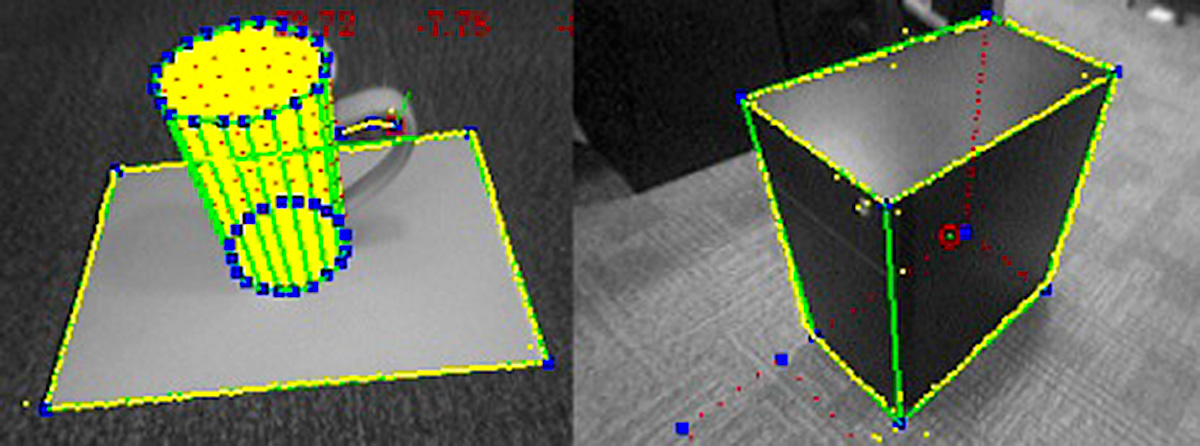

We present a new, general-purpose level set framework that handles sparse data by simultaneously segmenting the data and automatically interpolating its gaps. In this framework, the level set implicit function is interpolated by Radial Basis Functions (RBFs), and its interface can propagate in a sparse volume, using data information where available and RBF-based interpolation of its speeds in the gaps. Any segmentation criterion may be used, which allows the framework to process any imaging modality. Different modalities can be handled simultaneously because the method interpolates the level set contour rather than the image intensities. The framework offers improved robustness to noise in the images, and can segment sparse volumes by integrating the shape of the objects in the gaps.
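To make the idea of an RBF-interpolated implicit function concrete, here is a minimal sketch in plain Python. It is not the ISISD implementation: the Gaussian kernel, the shape parameter `eps`, the toy two-slice dataset and all function names are assumptions chosen for illustration. It fits RBF weights to implicit-function values known on two parallel slices, then evaluates the interpolant inside the gap between them.

```python
import math

def gaussian_rbf(r, eps=1.0):
    """Gaussian radial basis function (kernel choice is an assumption)."""
    return math.exp(-(eps * r) ** 2)

def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]  # augmented matrix
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            for k in range(c, n + 1):
                M[r][k] -= f * M[c][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(M[r][k] * x[k] for k in range(r + 1, n))
        x[r] = (M[r][n] - s) / M[r][r]
    return x

def fit_rbf(centres, values, eps=1.0):
    """Find weights so that the RBF sum reproduces `values` at `centres`."""
    A = [[gaussian_rbf(math.dist(p, q), eps) for q in centres] for p in centres]
    return solve(A, values)

def evaluate(point, centres, weights, eps=1.0):
    """Evaluate the interpolated implicit function at an arbitrary point."""
    return sum(w * gaussian_rbf(math.dist(point, c), eps)
               for w, c in zip(weights, centres))

# Toy sparse volume: two parallel slices (z = -0.5 and z = +0.5) with a gap.
grid = [-1.5, -0.75, 0.0, 0.75, 1.5]
centres = [(x, y, z) for z in (-0.5, 0.5) for x in grid for y in grid]
# Implicit function of a unit sphere: negative inside, positive outside.
values = [x * x + y * y + z * z - 1.0 for x, y, z in centres]

weights = fit_rbf(centres, values)
phi_gap_inside = evaluate((0.0, 0.0, 0.0), centres, weights)   # in the gap
phi_gap_outside = evaluate((1.5, 1.5, 0.0), centres, weights)  # in the gap
print(phi_gap_inside, phi_gap_outside)
```

In this toy case the interpolant is negative at the centre of the gap and positive near its corner, so the zero level set passes through the gap even though no data were sampled there. A full level set method would additionally evolve the implicit function under a segmentation speed, which this sketch does not attempt.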

More details and results may be found here.

The method is described in:

- Adeline Paiement, Majid Mirmehdi, Xianghua Xie, Mark Hamilton, Integrated Segmentation and Interpolation of Sparse Data. IEEE Transactions on Image Processing, Vol. 23, Issue 1, pp. 110-125, 2014.

IReSISD: Integrated Registration, Segmentation and Interpolation of Sparse Data

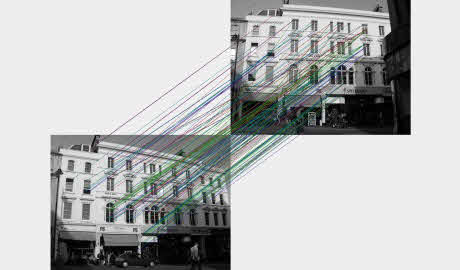

A new registration method, also based on level sets, has been developed and integrated into the previous RBF-interpolated level set framework. The new framework can thus correct misalignments in the data at the same time as it segments and interpolates them. Integrating all three processes of registration, segmentation and interpolation into a single framework allows them to benefit from one another. Notably, registration exploits the shape information provided by the segmentation stage in order to be robust to local minima and to limited intersections between the images of a dataset.
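The following toy sketch illustrates, under strong simplifying assumptions, what it can mean for registration to exploit shape information from segmentation. It is not the IReSISD algorithm: the analytic circle standing in for the segmented shape, the exhaustive search over in-plane translations, and all names are hypothetical. A misaligned slice contour is shifted until its points lie as close as possible to the zero level of the implicit shape.

```python
import math

def phi_circle(x, y, radius=1.0):
    """Stand-in implicit shape model: negative inside, zero on the boundary."""
    return math.hypot(x, y) - radius

def contour_cost(points, shift, phi):
    """Sum of squared implicit-function values at the shifted contour points."""
    dx, dy = shift
    return sum(phi(x + dx, y + dy) ** 2 for x, y in points)

def register_translation(points, phi, search=0.5, step=0.05):
    """Exhaustive search for the in-plane shift that best fits the shape."""
    n = round(search / step)
    candidates = [(i * step, j * step)
                  for i in range(-n, n + 1) for j in range(-n, n + 1)]
    return min(candidates, key=lambda s: contour_cost(points, s, phi))

# A slice contour of the unit circle, displaced by a simulated patient motion.
true_offset = (0.3, -0.2)
contour = [(math.cos(2 * math.pi * k / 16) + true_offset[0],
            math.sin(2 * math.pi * k / 16) + true_offset[1])
           for k in range(16)]

correction = register_translation(contour, phi_circle)
print(correction)  # estimated shift that undoes the displacement
```

Here the recovered correction is the opposite of the simulated offset. In an integrated setting the shape model would itself be updated as the slices are realigned, rather than fixed in advance as in this sketch.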


More details and results may be found here.

The method is described in:

- Adeline Paiement, Majid Mirmehdi, Xianghua Xie, Mark Hamilton, Registration and Modeling from Spaced and Misaligned Image Volumes. Submitted to IEEE Transactions on Image Processing.

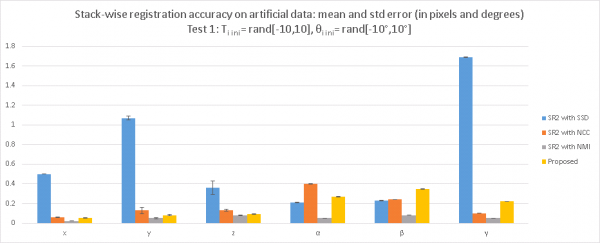

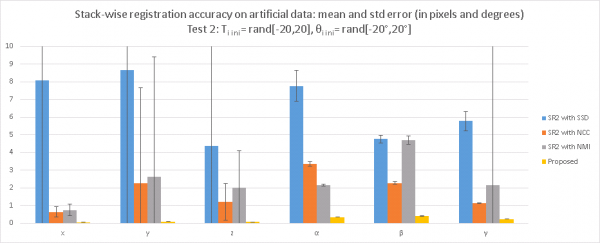

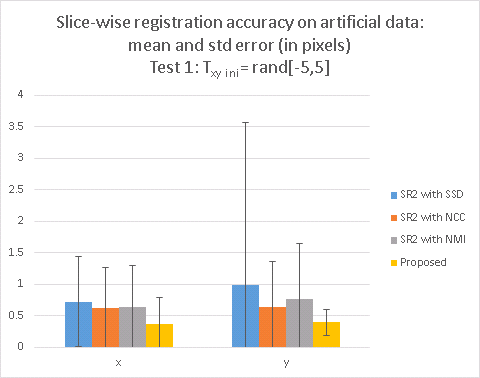

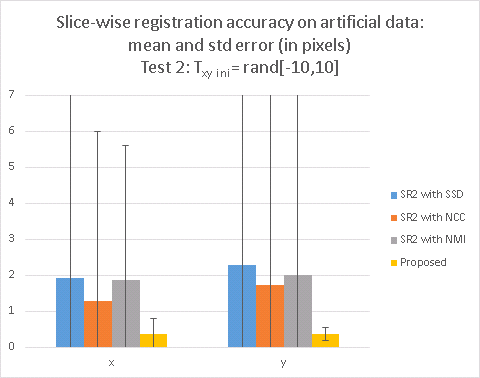

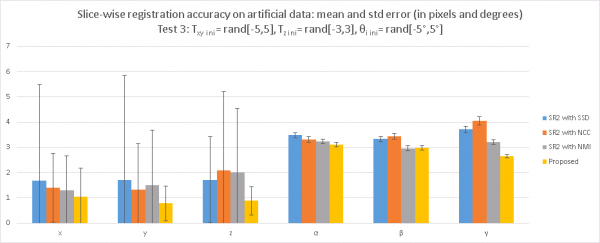

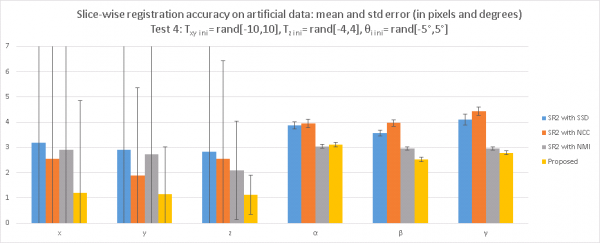

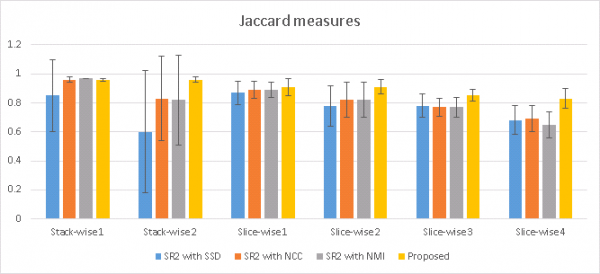

The tables in the article are reported in the graphs below:

Published Work

- Adeline Paiement, Majid Mirmehdi, Xianghua Xie, Mark Hamilton, Integrated Segmentation and Interpolation of Sparse Data. IEEE Transactions on Image Processing, Vol. 23, Issue 1, pp. 110-125, 2014.

- Adeline Paiement, Majid Mirmehdi, Xianghua Xie, Mark Hamilton, Simultaneous level set interpolation and segmentation of short- and long-axis MRI. Proceedings of Medical Image Understanding and Analysis (MIUA) 2010, pp. 267–272, July 2010.

Download Software

The latest version of the code for ISISD and IReSISD can be downloaded here (Version 1.3).

Earlier versions: