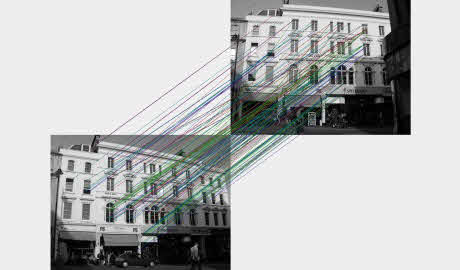

Visual place recognition methods which use image-matching techniques have shown success in recent years; however, their reliance on local features restricts their use to images which are visually similar and which overlap in viewpoint. We suggest that a semantic approach to the problem would provide a more meaningful relationship between views of a place, and so allow recognition when views are disparate and database coverage is sparse. As initial work towards this goal, we present a system which uses detected objects as the basic feature, and we demonstrate a promising ability to recognise places from arbitrary viewpoints. We build a 2D place model of object positions and extract features which characterise a pair of models. We then use distributions learned from training examples to compute the probability that the pair depict the same place, together with an estimate of the relative pose of the cameras. Results on a dataset of 40 urban locations show good recognition performance and pose estimation, even for highly disparate views.
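The probabilistic step described in the abstract can be illustrated with Bayes' rule. The sketch below is an illustration under stated assumptions, not the system described here. It assumes that each pair of place models is summarised by a fixed-length vector of scalar features scaled to [0, 1]. It also models each feature independently with a normalised histogram (a naive-Bayes simplification) and uses a hypothetical prior `prior_same`. The names `HistogramLikelihood`, `learn_likelihoods` and `posterior_same_place` are hypothetical, and the training data in the usage lines is synthetic.

```python
import numpy as np


class HistogramLikelihood:
    """Hypothetical 1D density for one pair feature, learned as a normalised histogram."""

    def __init__(self, samples, bins=10, value_range=(0.0, 1.0), eps=1e-6):
        density, self.edges = np.histogram(samples, bins=bins, range=value_range, density=True)
        # eps stops an empty bin from assigning exactly zero likelihood
        self.density = density + eps

    def __call__(self, x):
        # find the bin containing x, clamping values outside the training range
        idx = int(np.searchsorted(self.edges, x, side="right")) - 1
        return float(self.density[min(max(idx, 0), len(self.density) - 1)])


def learn_likelihoods(same_feats, diff_feats, bins=10):
    """Fit one histogram per feature column for matching and non-matching training pairs."""
    same = [HistogramLikelihood(same_feats[:, j], bins) for j in range(same_feats.shape[1])]
    diff = [HistogramLikelihood(diff_feats[:, j], bins) for j in range(diff_feats.shape[1])]
    return same, diff


def posterior_same_place(features, same, diff, prior_same=0.5):
    """P(same place | features) via Bayes' rule, assuming independent features."""
    log_same = np.log(prior_same) + sum(np.log(lik(x)) for lik, x in zip(same, features))
    log_diff = np.log(1.0 - prior_same) + sum(np.log(lik(x)) for lik, x in zip(diff, features))
    # logistic of the log-odds: equivalent to normalising the two joint probabilities
    return float(1.0 / (1.0 + np.exp(log_diff - log_same)))


# Synthetic training pairs: here matching pairs tend to have small feature values.
rng = np.random.default_rng(0)
same_train = rng.beta(2, 5, size=(200, 2))
diff_train = rng.beta(5, 2, size=(200, 2))
same_lik, diff_lik = learn_likelihoods(same_train, diff_train)
print(posterior_same_place([0.1, 0.2], same_lik, diff_lik))
print(posterior_same_place([0.9, 0.8], same_lik, diff_lik))
```

Working in log space keeps the product of many small likelihoods from underflowing when the feature vector is long.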

Notable Results

To assess the performance of our system, we collected a dataset of 40 locations, each with between 2 and 4 images taken from widely different viewpoints. Since the system learns distributions over comparisons of places, not about the places themselves, we trained it on a subset of the test dataset to make the most of the available data. To verify that this did not bias the results, we repeatedly retrained the system on a random 50% subset of the dataset and re-ran the test. The learned probability distributions were very similar across iterations, and recognition performance changed by no more than about 2%.
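The bias check described above (retraining on random halves of the data and re-running the test) amounts to a repeated-subsampling loop. This is a sketch under assumptions. `train_fn` and `evaluate_fn` are hypothetical stand-ins for the system's training step and recognition test. The subset is drawn without replacement, and the default number of trials is arbitrary because the text does not state how many were run.

```python
import random
from typing import Callable, List, Sequence, Tuple, TypeVar

Item = TypeVar("Item")
Model = TypeVar("Model")


def subset_stability(
    data: Sequence[Item],
    train_fn: Callable[[List[Item]], Model],
    evaluate_fn: Callable[[Model], float],
    n_trials: int = 10,
    fraction: float = 0.5,
    seed: int = 0,
) -> Tuple[List[float], float]:
    """Retrain on random subsets; return each trial's score and the max-min spread."""
    rng = random.Random(seed)
    k = max(1, round(fraction * len(data)))
    scores = []
    for _ in range(n_trials):
        subset = rng.sample(list(data), k)  # random training subset, without replacement
        model = train_fn(subset)
        # evaluate_fn is assumed to run the recognition test on the full dataset
        scores.append(evaluate_fn(model))
    return scores, max(scores) - min(scores)


# Toy usage: "training" averages the subset, "testing" rescales that average.
data = list(range(40))
scores, spread = subset_stability(
    data, train_fn=lambda s: sum(s) / len(s), evaluate_fn=lambda m: m / 39
)
print(f"spread across trials: {spread:.3f}")
```

A small spread, like the roughly 2% variation reported above, indicates that the learned distributions do not depend strongly on which half of the data was used for training.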

A place recognition experiment was then performed. Each image in the dataset was compared against every other image to compute the posterior probability that the two images depict the same place. The table below gives the performance of our system under several conditions. The “grouped” score is the percentage of test images for which an image from the same place was chosen as the most likely match; this simulates a place recognition scenario in which we have made a small number of previous observations of each place. It is also interesting to consider a harder case in which, for each test image, the database contains only a single matching image. The “pairwise” score simulates this situation by removing all but one of the matching images for each test image.
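Both scores can be computed from the matrix of posterior probabilities produced by the all-pairs comparison. The sketch below assumes a hypothetical matrix `post`, where `post[i, j]` is the probability that images i and j show the same place, and an array `labels` of place identifiers. The text does not say which matching image is kept for the pairwise score, so this version assumes that each one is tried in turn and the outcomes are averaged. Ties are resolved pessimistically, against the correct match. The matrix in the usage lines is made up for illustration.

```python
import numpy as np


def grouped_score(post: np.ndarray, labels: np.ndarray) -> float:
    """Fraction of queries whose most probable match (excluding itself) is the same place."""
    p = post.astype(float).copy()
    np.fill_diagonal(p, -np.inf)  # an image may not match itself
    best = p.argmax(axis=1)
    return float(np.mean(labels[best] == labels))


def pairwise_score(post: np.ndarray, labels: np.ndarray) -> float:
    """Accuracy when the database holds exactly one image of the query's place.

    Assumption: every same-place image is tried in turn as the single retained
    match, and the outcomes are averaged over all (query, retained match) trials.
    """
    n = len(labels)
    hits, trials = 0, 0
    for q in range(n):
        same = [j for j in range(n) if j != q and labels[j] == labels[q]]
        others = [j for j in range(n) if labels[j] != labels[q]]
        for keep in same:
            # keep goes last, so argmax ties resolve to a non-matching image
            candidates = others + [keep]
            best = candidates[int(np.argmax(post[q, candidates]))]
            hits += int(best == keep)
            trials += 1
    return hits / trials if trials else float("nan")


# Illustrative data: five images, three of place 0 and two of place 1.
labels = np.array([0, 0, 0, 1, 1])
post = np.array([
    [1.0, 0.9, 0.2, 0.5, 0.1],
    [0.9, 1.0, 0.8, 0.3, 0.2],
    [0.2, 0.8, 1.0, 0.1, 0.4],
    [0.5, 0.3, 0.1, 1.0, 0.7],
    [0.1, 0.2, 0.4, 0.7, 1.0],
])
print(grouped_score(post, labels), pairwise_score(post, labels))
```

In this toy example image 0 is matched correctly in the grouped setting through its strong match (image 1), but fails in the pairwise setting when only its weaker match (image 2) is retained. This is why the pairwise case is harder.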

We also observed that some of the system's discriminative ability is provided by the object classes themselves: a place with objects of class “sign” and “bollard” cannot possibly match a place containing only “traffic light” objects. Whilst this is a legitimate place recognition scenario, we wanted to observe the discriminative ability of the features alone. We therefore also tested the system on a “restricted class” subset of 30 locations, all of which contained the same two object classes, so that almost every image was capable of valid object correspondences with every other image. This is clearly a harder case; however, the table shows that performance was still reasonable.

| Condition | Grouped | Pairwise |
|---|---|---|
| Restricted class dataset | 67.9% | 54.5% |
| Full dataset | 73.1% | 61.8% |
| GIST (Oliva and Torralba, 2001) | 19.2% | 21.4% |
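The class-based constraint and the selection of the restricted-class subset described above can be sketched with plain class labels. The sketch assumes that objects may only be corresponded with objects of the same class. The text implies this but does not state it outright. Each image is represented as a hypothetical list of detected class names. `restricted_subset` keeps locations whose images contain only objects from a given set of classes, which is one assumed reading of "contained the same two object classes". The function names and data layout are illustrative.

```python
from collections import Counter
from typing import Dict, List, Set

Image = List[str]  # class labels of the objects detected in one image


def max_correspondences(objs_a: Image, objs_b: Image) -> int:
    """Upper bound on one-to-one, same-class object correspondences between two images."""
    count_a, count_b = Counter(objs_a), Counter(objs_b)
    return sum(min(count_a[c], count_b[c]) for c in count_a.keys() & count_b.keys())


def restricted_subset(locations: Dict[str, List[Image]], allowed: Set[str]) -> Dict[str, List[Image]]:
    """Keep only locations whose images contain nothing but the allowed object classes."""
    return {
        name: images
        for name, images in locations.items()
        if all(set(objs) <= allowed for objs in images)
    }


print(max_correspondences(["sign", "bollard"], ["traffic light"]))  # 0: no shared class
print(max_correspondences(["sign", "sign", "bollard"], ["sign", "bollard", "bollard"]))  # 2

locations = {
    "loc_a": [["sign", "bollard"], ["sign"]],
    "loc_b": [["sign", "traffic light"], ["traffic light"]],
}
print(sorted(restricted_subset(locations, {"sign", "bollard"})))  # ['loc_a']
```

A pair with zero possible correspondences can be rejected before any features are computed. Restricting the dataset removes most such easy rejections, which is why the restricted-class scores isolate the contribution of the geometric features.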