Massimo Camplani, Sion Hannuna, Majid Mirmehdi, Dima Damen, Adeline Paiement, Lili Tao, Tilo Burghardt. Real-time RGB-D Tracking with Depth Scaling Kernelised Correlation Filters and Occlusion Handling. British Machine Vision Conference, September 2015.

S. Hannuna, M. Camplani, J. Hall, M. Mirmehdi, D. Damen, T. Burghardt, A. Paiement, L. Tao. DS-KCF: A real-time tracker for RGB-D data. Journal of Real-Time Image Processing, 2016. The open-access publication is available at DOI: 10.1007/s11554-016-0654-3

The recent surge in popularity of real-time RGB-D sensors has encouraged research into combining colour and depth data for tracking. The results from a few recent works in RGB-D tracking have demonstrated that state-of-the-art RGB tracking algorithms can be outperformed by approaches that fuse colour and depth, for example [1, 3, 4, 5]. In this paper, we propose a real-time RGB-D tracker which we refer to as the Depth Scaling Kernelised Correlation Filters (DS-KCF). It is based on, and improves upon, the RGB Kernelised Correlation Filters tracker (KCF) from [2]. KCF uses the ‘kernel trick’ to extend correlation filters for very fast RGB tracking. The KCF tracker has important characteristics, in particular its ability to combine high accuracy and processing speed, as demonstrated in [2, 6].
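To make the idea behind KCF more concrete, the following is a minimal one-dimensional sketch of a kernelised correlation filter in the spirit of [2]. It is not the DS-KCF code: the signal, the Gaussian kernel width `sigma`, the regulariser `lam`, and all function names are illustrative assumptions. Training treats every cyclic shift of the template as a sample, so the kernel matrix is circulant and the ridge regression can be solved element-wise in the Fourier domain. For readability, detection is written as a direct sum here, whereas a real implementation would also use FFTs for that step.

```python
import cmath
import math

def dft(v):
    """Naive discrete Fourier transform (stand-in for an FFT)."""
    n = len(v)
    return [sum(v[t] * cmath.exp(-2j * math.pi * t * k / n) for t in range(n))
            for k in range(n)]

def idft(spectrum):
    """Naive inverse discrete Fourier transform."""
    n = len(spectrum)
    return [sum(spectrum[k] * cmath.exp(2j * math.pi * t * k / n) for k in range(n)) / n
            for t in range(n)]

def shift(v, m):
    """Cyclically shift v to the right by m samples."""
    n = len(v)
    return [v[(t - m) % n] for t in range(n)]

def gaussian_kernel(a, b, sigma):
    d2 = sum((p - q) ** 2 for p, q in zip(a, b))
    return math.exp(-d2 / (2.0 * sigma ** 2))

def train(x, y, sigma=3.0, lam=1e-2):
    """Kernel ridge regression over all cyclic shifts of x, solved per frequency."""
    n = len(x)
    g = [gaussian_kernel(x, shift(x, m), sigma) for m in range(n)]
    G, Y = dft(g), dft(y)
    alpha = idft([Yk / (Gk + lam) for Yk, Gk in zip(Y, G)])
    return [a.real for a in alpha]  # imaginary parts are rounding noise

def detect(alpha, x, z, sigma=3.0):
    """Response for every cyclic displacement m of the new patch z."""
    n = len(x)
    h = [gaussian_kernel(z, shift(x, t), sigma) for t in range(n)]
    return [sum(alpha[i] * h[(i + m) % n] for i in range(n)) for m in range(n)]

# Illustrative data: a small bump as the template, Gaussian-shaped labels at shift 0.
x = [0, 0, 1, 3, 5, 3, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0]
n = len(x)
y = [math.exp(-0.5 * min(i, n - i) ** 2) for i in range(n)]
alpha = train(x, y)

z = shift(x, 3)                        # the target moved 3 samples to the right
response = detect(alpha, x, z)
estimated_shift = response.index(max(response))
```

The position of the response peak gives the estimated displacement of the target between the template and the new patch.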

The proposed RGB-D single object tracker is able to exploit depth information to handle scale changes, occlusions, and shape changes. Despite the computational demands of the extra functionalities, we still achieve real-time performance rates of 35-43 fps in Matlab, and 187 fps in our C++ implementation. Our proposed method includes fast depth-based target object segmentation that enables (i) efficient scale change handling within the KCF core functionality in the Fourier domain, (ii) the detection of occlusions by temporal analysis of the target’s depth distribution, and (iii) the estimation of a target’s change of shape through the temporal evolution of its segmented silhouette. Both the Matlab and C++ versions of our software are available in the public domain.
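As a rough illustration of how depth can drive scale handling, the sketch below estimates the target's depth from the pixels in its bounding box and converts a change in depth into a scale factor. It rests on several assumptions that are not taken from the released code: a depth value of 0 marks a missing measurement, the target is the nearest well-populated depth cluster in the region, apparent size is inversely proportional to distance, and the scale is snapped to a small predefined set. The function names, bin width, and thresholds are hypothetical.

```python
from statistics import mean, pstdev

def target_depth_stats(depths_mm, bin_width_mm=100, min_fraction=0.15):
    """Return (mean, std) of the nearest well-populated depth cluster, or None."""
    valid = [d for d in depths_mm if d > 0]      # assumption: 0 means "no depth"
    if not valid:
        return None
    counts = {}
    for d in valid:
        b = int(d // bin_width_mm)
        counts[b] = counts.get(b, 0) + 1
    needed = min_fraction * len(valid)
    # Bins holding enough pixels; fall back to all bins if none qualifies.
    populated = [b for b, c in counts.items() if c >= needed] or list(counts)
    nearest = min(populated)
    # Keep the pixels in the nearest populated bin and its two neighbours.
    cluster = [d for d in valid if abs(int(d // bin_width_mm) - nearest) <= 1]
    return mean(cluster), pstdev(cluster)

def quantised_scale(initial_depth_mm, current_depth_mm, scales):
    """Pick the predefined scale closest to the depth ratio (closer means larger)."""
    ratio = initial_depth_mm / current_depth_mm
    return min(scales, key=lambda s: abs(s - ratio))
```

For example, a target first seen at 2020 mm whose region later contains a cluster centred at 1010 mm would be assigned the scale 2.0 from the set `[0.5, 0.75, 1.0, 1.5, 2.0]`. Restricting the scale to a fixed set means that any scale-dependent quantities can be prepared in advance rather than recomputed every frame.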

DS–KCF

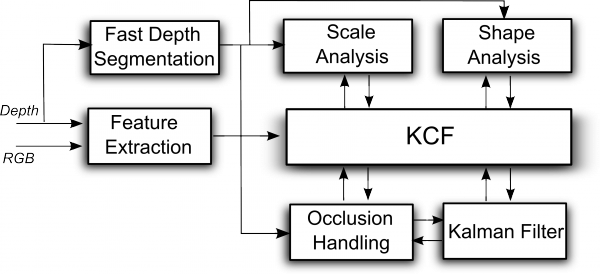

This paper contains an extension of the DS-KCF proposed in [7]. In this section we provide a detailed description of the core modules comprising DS-KCF, which extend the KCF tracker in a number of different ways. We integrate an efficient combination of colour and depth features in the KCF tracking scheme. We provide a change of scale module, based on depth distribution analysis, that allows the tracker’s model to be modified efficiently in the Fourier domain. Unlike other works that deal with change of scale within the KCF framework, such as [8, 9], our approach estimates the change of scale with minimal impact on real-time performance. We also introduce an occlusion handling module that is able to identify sudden changes in the target region’s depth histogram and to recover lost tracks. Finally, a change of shape module, based on the temporal evolution of the segmented target’s silhouette, is integrated into the framework. To improve the tracking performance during occlusions, we have added a simple Kalman filter motion model.
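The role of the Kalman filter motion model can be illustrated with a minimal constant-velocity filter for one image coordinate (one such filter per axis would be used for a 2D position). This is a generic textbook sketch, not the filter from the released code. The class name, noise variances, and initial covariance are assumptions chosen for the example. While the target is visible, each frame runs a predict step followed by an update with the measured position. During an occlusion only the predict step is run, so the estimate coasts along the last known velocity.

```python
class ConstantVelocityKalman1D:
    """Kalman filter with state (position, velocity) and position-only measurements."""

    def __init__(self, position, process_var=0.01, measurement_var=1.0):
        self.p, self.v = float(position), 0.0
        self.P = [[1.0, 0.0], [0.0, 100.0]]   # velocity is initially very uncertain
        self.q, self.r = process_var, measurement_var

    def predict(self, dt=1.0):
        """Propagate the state by dt; returns the predicted position."""
        self.p += dt * self.v
        P = self.P
        a00 = P[0][0] + dt * P[1][0]          # A = F * P with F = [[1, dt], [0, 1]]
        a01 = P[0][1] + dt * P[1][1]
        a10, a11 = P[1][0], P[1][1]
        self.P = [[a00 + dt * a01 + self.q, a01],      # A * F^T + Q
                  [a10 + dt * a11, a11 + self.q]]
        return self.p

    def update(self, z):
        """Correct the state with a measured position z."""
        P = self.P
        s = P[0][0] + self.r                  # innovation variance
        k0, k1 = P[0][0] / s, P[1][0] / s     # Kalman gain
        residual = z - self.p
        self.p += k0 * residual
        self.v += k1 * residual
        self.P = [[(1 - k0) * P[0][0], (1 - k0) * P[0][1]],
                  [P[1][0] - k1 * P[0][0], P[1][1] - k1 * P[0][1]]]

# Target moves 2 pixels per frame while visible ...
kf = ConstantVelocityKalman1D(0.0)
for t in range(1, 20):
    kf.predict()
    kf.update(2.0 * t)

# ... then becomes occluded: predict only, no measurement updates.
coasted = [kf.predict() for _ in range(3)]
```

In this example the coasted positions continue close to 40, 42 and 44 pixels, which is the kind of prediction that can guide where to search for the target when it reappears.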

A detailed overview of the modules of the proposed tracker is shown in the figure below. Initially, depth data in the target region is segmented to extract relevant features for the target’s depth distribution. The depth distribution is then modelled as a Gaussian, and the changes in scale estimated from it guide the update of the target’s model. At the same time, the region’s depth distribution is used to detect possible occlusions. During an occlusion, the model is not updated and the occluding object is tracked to guide the target search space. Kalman filtering is used to predict the position of the target and the occluding object in order to improve the occlusion recovery strategy. Further, segmentation masks are accumulated over time and used to detect significant changes in the shape of the object.
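One simple way to picture the depth-based occlusion test is sketched below. Given the Gaussian model of the target's depth (mean and standard deviation) from earlier frames, it measures what fraction of the valid pixels in the current region lie clearly in front of the target. The rule, the `k` multiplier, the `max_front_fraction` threshold, and the convention that 0 marks missing depth are all assumptions made for illustration. The actual tracker may combine further cues that are not described on this page.

```python
def occlusion_suspected(depths_mm, target_mean_mm, target_std_mm,
                        k=3.0, max_front_fraction=0.35):
    """Flag an occlusion when too many pixels are much closer than the target."""
    valid = [d for d in depths_mm if d > 0]      # assumption: 0 means "no depth"
    if not valid:
        return False                             # no depth evidence either way
    front_limit = target_mean_mm - k * target_std_mm
    in_front = sum(1 for d in valid if d < front_limit)
    return in_front / len(valid) > max_front_fraction

# Target modelled at 2000 mm with a 50 mm standard deviation.
mostly_target = [2000] * 80 + [1200] * 20    # a small object passes in front
mostly_occluder = [2000] * 40 + [1200] * 60  # an object covers most of the region
```

With these inputs the first region is not flagged and the second one is. While the flag is raised, the target model would be left unchanged, in line with the description above.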

Block diagram of the proposed DS-KCF tracker

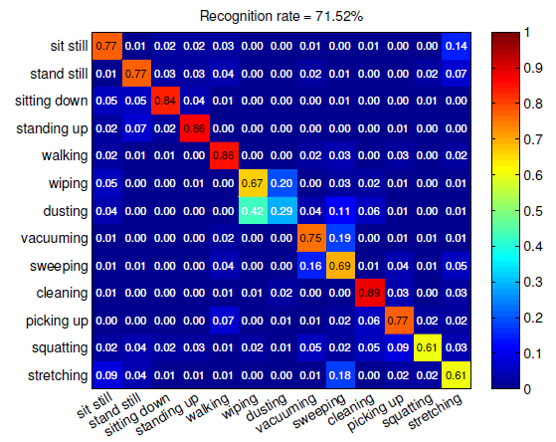

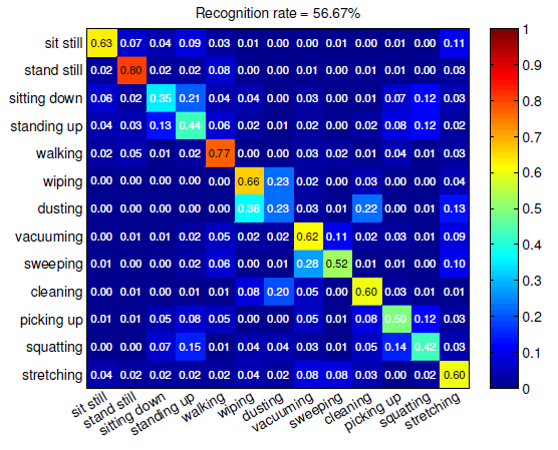

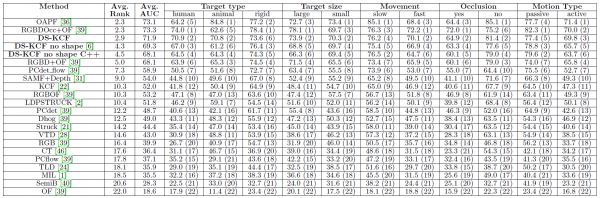

Results on Princeton Dataset [4] (validation set)

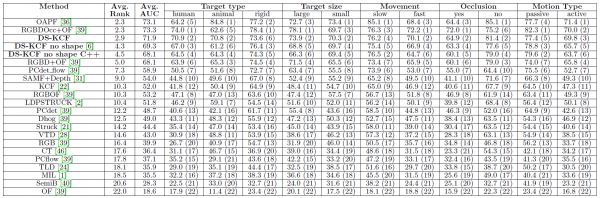

We compare tracking performance by reporting the precision value for an error threshold of 20 pixels (P20), the area under the curve (AUC) of the success plot, and the number of processed frames per second (fps). Table 1 shows that the proposed DS-KCF tracker outperforms the baseline KCF, giving better results in terms of both the AUC and P20 measures. DS-KCF also outperforms the other two RGB-D trackers tested, Prin-Track [4] and OAPF [3]. Furthermore, the average processing rate is 0.14 fps for Prin-Track (RGB-D) and 0.9 fps for the OAPF tracker, in striking contrast to 40 fps for DS-KCF. Example results of the trackers are shown in the videos below. Results on the test set of the Princeton dataset can be found here.
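For readers unfamiliar with these measures, the sketch below shows how they are commonly computed from predicted and ground-truth bounding boxes given as `(x, y, width, height)`. The function names and the box format are assumptions. Precision at 20 pixels is the fraction of frames whose centre location error is at most 20 pixels, and the success AUC is approximated by averaging the success rate over evenly spaced overlap thresholds in [0, 1]. Benchmark protocols may treat frames where the target is absent or occluded differently, which this sketch does not model.

```python
import math

def center_error(a, b):
    """Euclidean distance between the centres of two (x, y, w, h) boxes."""
    ax, ay = a[0] + a[2] / 2.0, a[1] + a[3] / 2.0
    bx, by = b[0] + b[2] / 2.0, b[1] + b[3] / 2.0
    return math.hypot(ax - bx, ay - by)

def overlap(a, b):
    """Intersection over union of two (x, y, w, h) boxes."""
    iw = max(0.0, min(a[0] + a[2], b[0] + b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[1] + a[3], b[1] + b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0

def precision_at(predicted, truth, threshold_px=20.0):
    """Fraction of frames with centre error within the pixel threshold."""
    hits = sum(center_error(p, g) <= threshold_px for p, g in zip(predicted, truth))
    return hits / len(truth)

def success_auc(predicted, truth, steps=101):
    """Mean success rate over overlap thresholds 0, 0.01, ..., 1."""
    overlaps = [overlap(p, g) for p, g in zip(predicted, truth)]
    thresholds = [i / (steps - 1) for i in range(steps)]
    rates = [sum(o > t for o in overlaps) / len(overlaps) for t in thresholds]
    return sum(rates) / len(rates)

# Two frames: one perfect prediction and one complete miss.
truth = [(0, 0, 10, 10), (0, 0, 10, 10)]
predicted = [(0, 0, 10, 10), (100, 100, 10, 10)]
p20 = precision_at(predicted, truth)
auc = success_auc(predicted, truth)
```

In this toy case half of the frames fall within the 20-pixel threshold, and the success AUC is just under 0.5 because the perfect frame counts as a success at every threshold below 1.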

Trackers’ performance on Princeton Evaluation Dataset [4]

Overall, only two approaches, OAPF [3] and RGBDOcc+OF [4], obtain a higher Avg. Rank (by approximately 0.6%) and a higher average AUC value (by approximately 1.4%). However, as reported by their authors, these approaches achieve a very low processing rate of less than 1 fps. Our proposed method has an average processing rate ranging from 35 to 43 fps for its Matlab implementation and 187 fps for its C++ version.

Updated results can be found on the dataset webpage.

Qualitative examples of the DS-KCF’s performance on Princeton Dataset [4] and BoBoT-D dataset [1] are shown below.

GITHUB DS–KCF code

The C++ and MATLAB versions of the code are also available on GitHub here. The DSKCF tracker code may be used on the condition of citing our paper “DS-KCF: A real-time tracker for RGB-D data. Journal of Real-Time Image Processing” and the SPHERE project. The code is released under the BSD license.

DSKCF C++ code (no shape Module)

The C++ code of the new version of the DSKCF tracker may be used on the condition of citing our paper “DS-KCF: A real-time tracker for RGB-D data. Journal of Real-Time Image Processing” and the SPHERE project. The code is released under the BSD license and can be downloaded here. Please note that this version does not contain the shape-handling module.

DSKCF Matlab code (with shape Module)

The Matlab code of the new version of the DSKCF tracker may be used on the condition of citing our paper “DS-KCF: A real-time tracker for RGB-D data. Journal of Real-Time Image Processing” and the SPHERE project. The code is released under the BSD license and can be downloaded here.

DSKCF Matlab code (BMVC VERSION)

The Matlab code of the BMVC version of the DSKCF tracker can be downloaded here. It may be used on the condition of citing our paper “Real-time RGB-D Tracking with Depth Scaling Kernelised Correlation Filters and Occlusion Handling, BMVC 2015” and the SPHERE project. The code is released under the BSD license.

RotTrack DATASET

The dataset can be used for research purposes on the condition of citing our paper “DS-KCF: A real-time tracker for RGB-D data. Journal of Real-Time Image Processing” and the SPHERE project. The dataset can be downloaded from the University of Bristol official repository, data.bris.

References

[1] G. García, D. Klein, J. Stückler, S. Frintrop, and A. Cremers. Adaptive multi-cue 3D tracking of arbitrary objects. In Pattern Recognition, pages 357–366. 2012.

[2] J. F. Henriques, R. Caseiro, P. Martins, and J. Batista. High-speed tracking with kernelized correlation filters. Pattern Analysis and Machine Intelligence, IEEE Transactions on, 2015.

[3] T. Meshgi, S. Maeda, S. Oba, H. Skibbe, Y. Li, and S. Ishii. Occlusion aware particle filter tracker to handle complex and persistent occlusions. Computer Vision and Image Understanding, 2015 to appear.

[4] S. Song and J. Xiao. Tracking revisited using RGBD camera: Unified benchmark and baselines. In Computer Vision (ICCV), 2013 IEEE International Conference on, pages 233–240, 2013.

[5] Q. Wang, J. Fang, and Y. Yuan. Multi-cue based tracking. Neurocomputing, 131(0):227 – 236, 2014.

[6] Y. Wu, J. Lim, and M. Yang. Online Object Tracking: A Benchmark. In Computer Vision and Pattern Recognition (CVPR), 2013 IEEE Conference on, pages 2411–2418, 2013.

[7] M. Camplani, S. Hannuna, M. Mirmehdi, D. Damen, A. Paiement, L. Tao, and T. Burghardt. Real-time RGB-D tracking with depth scaling kernelised correlation filters and occlusion handling. In British Machine Vision Conference (BMVC), 2015.

[8] M. Danelljan, G. Haager, F. Shahbaz Khan, and M. Felsberg. Accurate scale estimation for robust visual tracking. In BMVC, 2014.

[9] Y. Li and J. Zhu. A scale adaptive kernel correlation filter tracker with feature integration. In ECCV Workshops, volume 8926, pages 254–265, 2015.