A novel close-to-sensor computational camera has been designed and developed at the ViLab. Regions of interest (ROIs) can be captured and processed at 1000 fps; the concurrent processing enables low-latency sensor control and flexible image processing. With 9DoF motion sensing, the low size, weight and power form factor makes it ideally suited for robotics and UAV applications. The modular design allows multiple configurations and output options, easing development of embedded applications. General-purpose output can directly interface with external devices such as servos and motors, while Ethernet offers a conventional image output capability. A binocular system can be configured with self-driven pan/tilt positioning, as an autonomous verging system or as a standard stereo pair. More information can be found here: xcamflyer.

Tag: N.Campbell

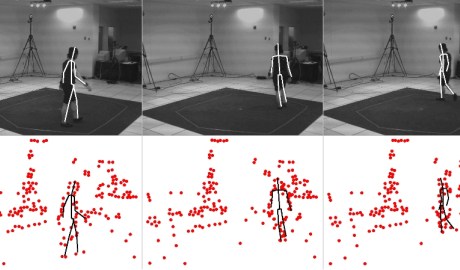

Human pose estimation using motion

Ben Daubney, David Gibson, Neill Campbell

Currently we are researching how to extract human pose from a sparse set of moving features. This work is inspired by psychophysical experiments using the Moving Light Display (MLD), where it has been shown that a small set of moving points attached to the key joints of a person can convey a wealth of information to an observer about the person being viewed, such as their mood or gender. Unlike the typical MLDs used in the psychophysics community, ours are automatically generated by applying a standard feature tracker to a sequence of images.

The result is a set of features that are far noisier and less reliable than those traditionally used. The purpose of this research is to better understand how the temporal dimension of a sequence of images can be exploited at a much lower level than is currently used to estimate pose.

Penguin Identification

Tilo Burghardt, Neill Campbell, Peter Barham, Richard Sherley

This early research was conducted between 2006 and 2009. It aimed to explore some first non-invasive identification solutions for problems in field biology, and to better understand and help conserve endangered species. Specifically, we developed approaches to monitor individuals in uniquely patterned animal populations using techniques that originated in computer vision and human biometrics. Work was centred around the African penguin (Spheniscus demersus).

During the project we provided a proof of concept for an autonomously operating prototype system capable of monitoring and recognising a group of individual African penguins in their natural environment without tagging or otherwise disturbing the animals. The prototype system was limited to very good acquisition and environmental conditions, and operated only on animals with sufficiently complex natural patterns.

Research was conducted together with the Animal Demography Unit at the University of Cape Town, South Africa. The project was funded by the Leverhulme Trust, with long-term support in the field from the Earthwatch Institute, and with pilot tests run in collaboration with Bristol Zoo Gardens.

Whilst deep learning approaches of today have replaced most of the traditional identification techniques of the 2000s, the practical and applicational insights gained in this project helped inform some of our current work on animal biometrics.

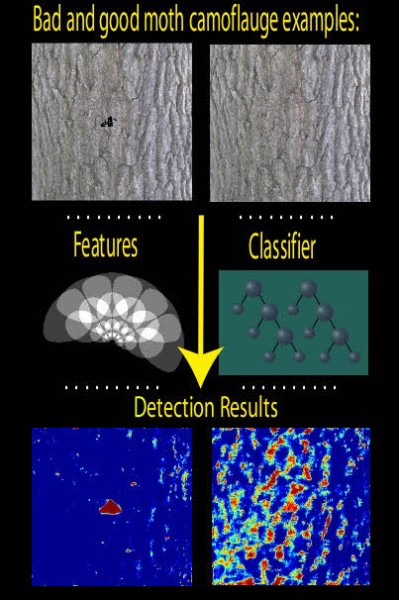

Analysis of moth camouflage

A half-million-pound BBSRC collaboration with Biological Sciences and Experimental Psychology, this project aims to develop a computational theory of animal camouflage, with models specific to the visual systems of birds and humans. Moths have been chosen for this study as they are particularly good demonstrators of a wide range of cryptic and disruptive camouflage in nature. Using psychophysically plausible low-level image features, learning algorithms are used to determine the effectiveness of camouflage examples. The ability to generate and process large numbers of camouflage examples enables predictive computational models to be created and compared to the performance of human and bird subjects. Such comparisons will give insights into which aspects of moth camouflage are important for avoiding detection and recognition by birds and humans, and thereby into the mechanisms employed by bird and human visual systems.