The Visual Information Laboratory group got together for their annual Summer BBQ last week in Brandon Hill Park, and enjoyed some rare sunshine!

The Picture Coding Symposium (PCS) is an international forum devoted to advances in visual data coding. Established in 1969, it has the longest history of any conference in this area. The 37th event in the series, PCS 2024, will convene in Taichung, Taiwan. Taichung is centrally located in the western half of Taiwan. It is known as an art and cultural hub of Taiwan, being home to many national museums, historic attractions, art installations, and design boutiques.

As well as Dave Bull, other panelists include:

Prof. Dr.-Ing. Joern Ostermann, member of the Senat of Leibniz Universität Hannover.

Prof. C.-C. Jay Kuo, Ming Hsieh Chair in Electrical and Computer Engineering-Systems at the University of Southern California.

Dr. Yu-Wen Huang, deputy director of the video coding research team at MediaTek.

We are pleased to announce that Professor Dave Bull has been invited to speak at the Westminster Policy Forum event, Next Steps for the Western Gateway.

The Westminster Social Policy Forum & Policy Forum for Wales keynote seminar, Priorities for economic development and investment in the South Wales and Western England region, will take place on 21 June.

This event will evaluate the most recent advancements and future strategies for the South Wales and Western England area. It presents a platform to delve into crucial topics during a period of increased policy attention leading up to the General Election. Attendees will review the progress made thus far and set out priorities for the future to accomplish the goals of the region’s cross-border partnership. These goals include drawing more private investment into Western England and South Wales, achieving sustainable economic growth, and enhancing coordination and cooperation among local authorities within the region.

Key focuses will include economic development and investment priorities in the South Wales and Western England area. The conference will also explore ways to advance innovation in the region and determine the next steps towards positioning the area as a frontrunner in achieving net zero goals and promoting low carbon energy. The agenda will address the necessary policies and the roles of key stakeholders going forward to facilitate increased financial investments in the region.

Dave Bull, Director of MyWorld, will be speaking alongside Joanna Jenkinson, Director of GW4, on the theme of Supporting future regional growth. They will discuss supporting career opportunities, employment and capabilities across the region; examining plans for the UK’s first pan-national Cyber Super Cluster; building upon the region’s digital innovation strengths in AI, 5G, computing and robotics; establishing a coordinated approach and long-term funding for skills development; and strengthening collaboration within the third sector.

South by Southwest (SXSW) is an annual conglomeration of parallel film, interactive media, and music festivals and conferences organized jointly that takes place in mid-March in Austin, Texas, United States.

This year, MyWorld delegates exhibited work as part of the Immersive Futures Lab at the festival. The SXSW showcase included live demos and prototypes with representatives from two XR development programmes: MyWorld (UK) and Xn Québec (Canada).

The Lab at SXSW 2024 brought cutting-edge projects supported by MyWorld (UK) and Xn Québec (Canada), giving their creators a platform to put exciting prototypes into the hands of new audiences and tell the stories behind their work.

The MyWorld delegation included the following projects:

Studio 5

Game Conscious Dialogue

Inside Mental Health

Lux Aeterna

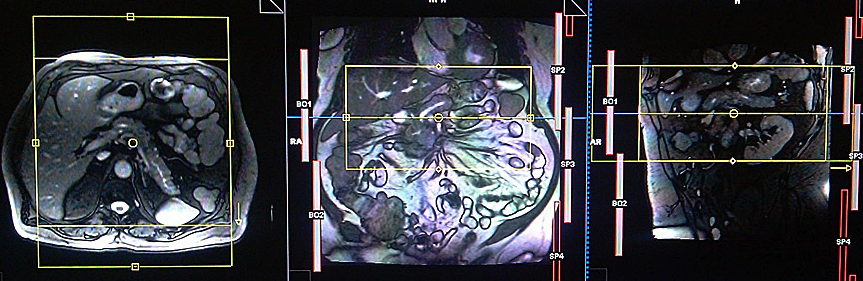

Current imaging tests provide inadequate sensitivity and specificity for detecting pre-malignant pancreatic cancer lesions, because the lesions are either too small (e.g. on MRI/CT) or isointense/isodense to normal tissue. This can lead to missed diagnoses on imaging in at-risk patients. This project brings together a team of clinical and non-clinical scientists to work on this challenge by combining two novel non-invasive approaches to increase the diagnostic yield of MRI in patients with early malignant disease: (1) development of new multifunctional targeted nanoparticles for detection of pre-malignant pancreatic lesions, and (2) improvement of MRI diagnostic yield through the application of super-resolution reconstruction (SRR) and quantitative MRI from MR Fingerprinting (MRF) for reproducible and rapid scanning.

Pancreatic cancer has the lowest survival of all common cancers, with five-year survival of less than 7%, and is the 5th biggest cancer killer in the UK [PCUK]. Early diagnosis is crucial to improving survival outcomes for people with pancreatic cancer: one-year survival in those diagnosed at an early stage is six times higher than in those diagnosed at stage four. However, around 80% of patients are not diagnosed until the cancer is at an advanced stage, when surgery or other treatment is usually not possible. Not only do we need the tools and knowledge to diagnose people at an earlier stage, but we also need to make the diagnosis process faster so that we don’t waste any precious time in moving people onto potentially life-saving surgery or other treatments.

UCL Hospitals NHS Trust; the Universities of Strathclyde, Glasgow and Liverpool; and Imperial College London.