Lili Tao, Tilo Burghardt, Sion Hannuna, Massimo Camplani, Adeline Paiement, Dima Damen, Majid Mirmehdi, Ian Craddock. A Comparative Home Activity Monitoring Study using Visual and Inertial Sensors, 17th International Conference on E-Health Networking, Application and Services (IEEE HealthCom), 2015

Monitoring actions at home can provide essential information for rehabilitation management. This paper presents a comparative study and a dataset for the fully automated, sample-accurate recognition of common home actions in the living room environment using commercial-grade, inexpensive inertial and visual sensors. We investigate the practical home use of body-worn mobile phone inertial sensors together with an Asus Xmotion RGB-Depth camera to monitor daily living scenarios. To test this setup against realistic data, we introduce the challenging SPHERE-H130 action dataset containing 130 sequences of 13 household actions recorded in a home environment. We report automatic recognition results at maximal temporal resolution, which indicate that a vision-based approach outperforms accelerometry provided by two phone-based inertial sensors by an average of 14.85% in accuracy for home actions. Further, we report improved accuracy of the vision-based approach over accelerometry on particularly challenging actions as well as when generalising across subjects.

Data collection and processing

For visual data, we simultaneously collect RGB and depth images using the commercial product Asus Xmotion. Motion information can be recovered best from RGB data as it contains rich texture and colour information. Depth information, on the other hand, reveals details of the 3D configuration. We extract and encode both motion and depth features over the area of a bounding box as returned by the human detector and tracker provided by the OpenNI SDK[1]. For collecting inertial data, we opt for subjects to wear two accelerometers mounted at the centre of the waist and the dominant wrist.
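As a rough illustration of restricting feature extraction to the tracked person, the sketch below crops a frame to a bounding box and computes two simple descriptors inside it. It is not the feature encoding used in the paper: the `(x, y, width, height)` box format, the 8-bin depth histogram, the 4000 mm depth range, the treatment of 0 as missing depth, and the frame-difference motion measure are all assumptions chosen for brevity.

```python
import numpy as np

def crop_box(frame, box):
    """Crop a frame to a bounding box given as (x, y, width, height) in pixels."""
    x, y, w, h = box
    return frame[y:y + h, x:x + w]

def depth_histogram(depth, box, bins=8, max_depth_mm=4000):
    """Normalised histogram of valid depth values inside the box (hypothetical feature)."""
    patch = crop_box(depth, box)
    # Assumption: a depth value of 0 marks a missing measurement.
    valid = patch[(patch > 0) & (patch <= max_depth_mm)]
    hist, _ = np.histogram(valid, bins=bins, range=(0, max_depth_mm))
    total = hist.sum()
    return hist / total if total > 0 else hist.astype(float)

def motion_energy(prev_gray, curr_gray, box):
    """Mean absolute frame difference inside the box, a crude stand-in for motion features."""
    a = crop_box(prev_gray, box).astype(np.float32)
    b = crop_box(curr_gray, box).astype(np.float32)
    return float(np.mean(np.abs(b - a)))
```

In practice the box would come from the person tracker for each frame, and the two descriptors would be concatenated or encoded further before classification.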

The figure below gives an overview of the system.

Figure 1. Experimental setup.

SPHERE-H130 Action Dataset

We introduce the SPHERE-H130 action dataset for human action recognition from RGB-Depth and inertial sensor data captured in a real living environment. The dataset was generated over 10 sessions by 5 subjects, with 13 action categories per session: sit still, stand still, sitting down, standing up, walking, wiping table, dusting, vacuuming, sweeping floor, cleaning stain, picking up, squatting, upper body stretching. Overall, the recordings amount to about 70 minutes of captured data. Actions were simultaneously captured by the Asus Xmotion RGB-Depth camera and the two wireless accelerometers. Colour and depth images were acquired at a rate of 30Hz. The accelerometer data was captured at about 100Hz and downsampled to 30Hz, a frequency recognised as optimal for human action recognition.
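One way to bring accelerometer samples onto the 30Hz video rate is to interpolate each axis onto a regular time grid. The sketch below is a minimal example under stated assumptions: timestamps are in seconds and strictly increasing, samples are an N x 3 array, and plain linear interpolation is used without an anti-aliasing filter. The function name `resample_to_rate` is hypothetical, and the resampling procedure actually used for the dataset may differ.

```python
import numpy as np

def resample_to_rate(timestamps, samples, target_hz=30.0):
    """Linearly interpolate multi-axis samples onto a regular grid at target_hz.

    timestamps: 1-D array of sample times in seconds, strictly increasing.
    samples:    2-D array of shape (len(timestamps), n_axes).
    Returns the new time grid and the resampled array.
    """
    timestamps = np.asarray(timestamps, dtype=float)
    samples = np.asarray(samples, dtype=float)
    duration = timestamps[-1] - timestamps[0]
    n_out = int(np.floor(duration * target_hz)) + 1
    new_t = timestamps[0] + np.arange(n_out) / target_hz
    # np.interp handles one axis at a time, so interpolate each column separately.
    columns = [np.interp(new_t, timestamps, samples[:, k])
               for k in range(samples.shape[1])]
    return new_t, np.column_stack(columns)
```

With the output grid starting at the first video frame time, row i of the resampled array lines up with video frame i, which makes per-frame labels directly comparable across the two sensor types.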

sitting standing sitting down standing up walking

wiping dusting vacuuming sweeping cleaning stain

picking squatting stretching

Figure 2. Sample videos from the dataset

Results

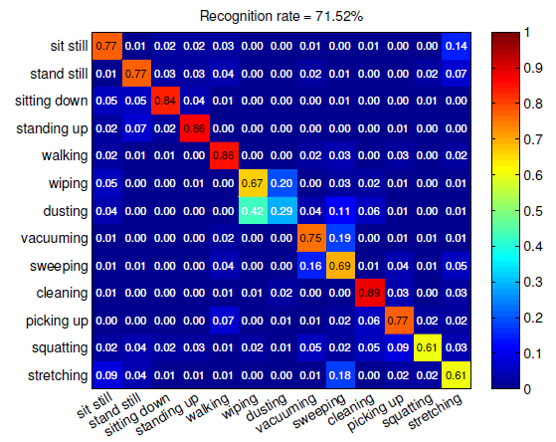

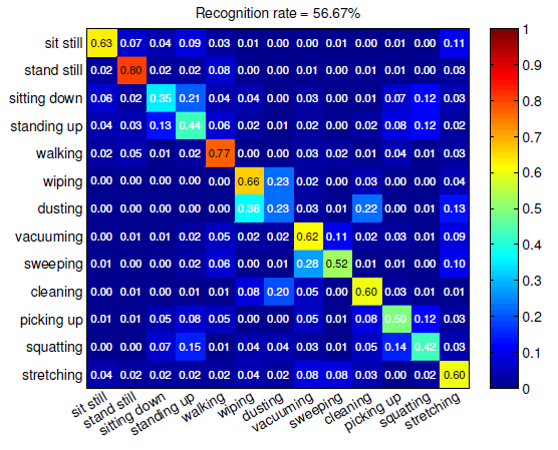

Vision can be more accurate than accelerometers. Figure 3 shows the recognition confusion matrices obtained with the visual and inertial sensors, respectively.

Figure 3. Confusion matrices for (left) visual data and (right) accelerometer data.
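For readers who want to produce comparable matrices from their own predictions, the sketch below builds a confusion matrix from per-frame labels and derives overall accuracy and per-class recall. It assumes integer class labels in the range 0 to n_classes - 1, with rows indexing the true class and columns the predicted class. The function names are illustrative, and the paper's exact evaluation protocol is not reproduced here.

```python
import numpy as np

def confusion_matrix(true_labels, predicted_labels, n_classes):
    """Count matrix with rows = true class, columns = predicted class."""
    true_labels = np.asarray(true_labels, dtype=int)
    predicted_labels = np.asarray(predicted_labels, dtype=int)
    cm = np.zeros((n_classes, n_classes), dtype=int)
    # np.add.at accumulates correctly when the same cell is indexed repeatedly.
    np.add.at(cm, (true_labels, predicted_labels), 1)
    return cm

def overall_accuracy(cm):
    """Fraction of all samples that lie on the diagonal."""
    return np.trace(cm) / cm.sum()

def per_class_recall(cm):
    """Diagonal divided by row sums; classes with no samples get 0."""
    row_sums = cm.sum(axis=1)
    out = np.zeros(cm.shape[0], dtype=float)
    return np.divide(np.diag(cm), row_sums, out=out, where=row_sums > 0)
```

Computing `overall_accuracy` separately for the visual and the accelerometer predictions on the same frames gives the kind of per-sensor comparison summarised above.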

Publication and Dataset

The dataset and the proposed method are presented in the following paper:

- Lili Tao, Tilo Burghardt, Sion Hannuna, Massimo Camplani, Adeline Paiement, Dima Damen, Majid Mirmehdi, Ian Craddock. A Comparative Home Activity Monitoring Study using Visual and Inertial Sensors, 17th International Conference on E-Health Networking, Application and Services (IEEE HealthCom), 2015

The SPHERE-H130 action dataset can be downloaded here.

References

[1] OpenNI User Guide, OpenNI organization, November 2010. Available: http://www.openni.org/documentation