The evaluation of quality is a key component of many image and video processing systems. Its uses include benchmarking compression algorithms and evaluating denoising and other image enhancement systems. Quality assessment has been a focus of VI-Lab for many years, primarily linked to its work in video coding.

New approaches to perceptual quality assessment and measuring engagement: Assessing the perceptual quality of mediated visual content is both critical and challenging. Human visual perception is highly complex, influenced by many confounding factors and not fully understood, and hence difficult to model. The perceptual distortion experienced by a human viewer cannot be fully characterised using simple mathematical differences (distortion) between the original and processed content. There has therefore been significant research into perception-based metrics that attempt to model aspects of human visual perception and thus correlate better with subjective opinions. Work in VI-Lab has contributed state-of-the-art, low-complexity metrics including AVM and PVM and, in collaboration with Netflix, has helped to refine the increasingly popular VMAF metric.
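To illustrate what a "simple mathematical difference" looks like, the following minimal sketch computes mean squared error (MSE) and PSNR for two hypothetical distortions of a flat 64-pixel patch. The pixel values and the 8-bit peak of 255 are assumptions chosen for illustration and are not taken from VI-Lab's work. Both distortions yield exactly the same score, even though one spreads a small error over every pixel and the other concentrates it in a single pixel, so the measure cannot tell them apart.

```python
import math


def mse(ref, test):
    """Mean squared error between two equal-length pixel sequences."""
    if len(ref) != len(test):
        raise ValueError("signals must have the same length")
    return sum((r - t) ** 2 for r, t in zip(ref, test)) / len(ref)


def psnr(ref, test, peak=255.0):
    """Peak signal-to-noise ratio in dB (peak=255 assumes 8-bit content)."""
    err = mse(ref, test)
    if err == 0:
        return math.inf
    return 10.0 * math.log10(peak ** 2 / err)


# Hypothetical flat grey patch of 64 pixels.
reference = [100] * 64

# Distortion A: every pixel is shifted by a small amount.
uniform_shift = [p + 4 for p in reference]

# Distortion B: a single pixel carries one large error.
single_spike = list(reference)
single_spike[0] += 32

print(mse(reference, uniform_shift), mse(reference, single_spike))
print(psnr(reference, uniform_shift), psnr(reference, single_spike))
```

How a viewer would rate each distortion depends on content and viewing conditions, which is the information a perception-based metric tries to account for.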

Rate-Quality Optimisation: Video metrics are not used only to compare coding algorithms. They also play an important role in the picture compression and delivery process, for example by enabling in-loop rate-quality optimisation (RQO) or Dynamic Optimisation of content for adaptive internet streaming. More recently, they have become relevant to the evaluation of loss in machine learning systems.
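As a rough illustration of how a quality metric can drive an in-loop decision, the sketch below uses the conventional Lagrangian formulation, in which the encoder picks the option that minimises the cost J = D + λR. This is an assumption about the general approach rather than a description of any specific VI-Lab or codec implementation. The `Candidate` type, the candidate names and all rate and distortion figures are hypothetical; in an RQO setting the distortion term would come from a perceptual metric rather than a plain pixel difference.

```python
from typing import NamedTuple


class Candidate(NamedTuple):
    """One hypothetical coding option for a block or a frame."""
    name: str
    rate_bits: float   # bits needed to code this option
    distortion: float  # distortion score (lower is better)


def select_candidate(candidates, lam):
    """Return the candidate minimising the Lagrangian cost J = D + lam * R."""
    if not candidates:
        raise ValueError("at least one candidate is required")
    return min(candidates, key=lambda c: c.distortion + lam * c.rate_bits)


# Illustrative figures only.
candidates = [
    Candidate("coarse", rate_bits=100, distortion=40.0),
    Candidate("medium", rate_bits=200, distortion=20.0),
    Candidate("fine", rate_bits=400, distortion=10.0),
]

# A small multiplier favours quality; a large one favours bit savings.
for lam in (0.01, 0.1, 0.5):
    print(lam, select_candidate(candidates, lam).name)
```

The multiplier λ sets the trade-off: as it grows, the selection moves from the high-rate, low-distortion option towards the cheaper, more distorted one.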

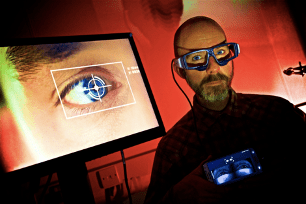

Subjective assessment and user experience: Because no perceptual metric is universally adopted, or indeed reliable across all content types and distortions, the ultimate characterisation of algorithm performance has invariably been based on subjective assessment, in which a group of viewers is asked for opinions on quality under a range of test conditions. VI-Lab has undertaken numerous subjective comparisons of codec performance and has also contributed to test methodologies through the production of open source tools and testing recommendations. Finally, the group has worked with colleagues in Experimental Psychology to create a framework for the measurement of immersion.
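The relationship between subjective opinions and an objective metric is commonly summarised by averaging viewer ratings into a mean opinion score (MOS) per test condition and then computing a rank correlation between the MOS values and the metric's scores. The sketch below shows one way to do this with Spearman's rank correlation using only the Python standard library. The ratings, the five-point scale and the metric scores are invented for illustration, and the procedure is a generic one rather than VI-Lab's published methodology.

```python
from statistics import mean


def mean_opinion_scores(ratings_per_condition):
    """Average the viewer ratings collected for each test condition."""
    return [mean(ratings) for ratings in ratings_per_condition]


def average_ranks(values):
    """Rank values from 1 upwards, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = rank
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson linear correlation coefficient of two equal-length lists."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / (var_x * var_y) ** 0.5


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical ratings (1 = bad, 5 = excellent) from three viewers
# for four test conditions, and hypothetical scores from some metric.
ratings = [[5, 4, 5], [4, 4, 3], [2, 3, 2], [1, 2, 1]]
metric_scores = [92.0, 80.0, 55.0, 60.0]

mos = mean_opinion_scores(ratings)
print(mos)
print(spearman(metric_scores, mos))
```

In this invented example the metric orders the two lowest-quality conditions differently from the viewers, so the correlation falls below 1.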

Test datasets: Test databases are essential for evaluating image and video processing tools, particularly those for video compression and denoising. They must contain not only diverse content relevant to the design purpose but also its associated metadata.

Please follow the links below for a detailed description of our research:

- Objective metrics for perceptual visual quality assessment

- Optimum presentation duration

- Assessing High frame rate video (BVI-HFR)

- Characterizing the spatiotemporal envelope of the human visual system

- Contrast Sensitivity functions

- Visual Saliency, attention and locomotion

- Rate Quality Assessment of standardized codecs

- BVI datasets [Homtex, BVI-HFR, BVI-HD, BVI-Syntex, etc.]

- Measuring Audience and user experiences