Video production techniques are key to how we plan and acquire content and how we convert this raw content into a form ready for delivery and consumption. This area has boomed in recent years with advances in motion and volumetric capture, new and more immersive video formats, and the emergence of virtual production methods. In particular, advances in deep learning are now having a major impact on production workflows. VI-Lab has contributed significant innovations in optimising production workflows, creating virtual production environments and mitigating the influence of challenging acquisition environments.

AI-Enhanced Production Workflows: There is huge potential for AI in the creative sector, and VI-Lab researchers are helping to realise it, both by enhancing production workflows and by modelling perceptual processes for video compression and quality metrics. VI-Lab also has world-leading expertise in using these tools to measure and assess human activities, to recognise humans and animals, to detect anomalies, to see in the dark and to resample video data through frame interpolation. A particular challenge for many real-world applications (e.g. colorisation, denoising and low-light enhancement) is that ground truth data does not exist. This demands specific architectural approaches and perceptually optimised loss functions. VI-Lab has also published the most comprehensive review of AI in the creative industries.
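To make "resampling video data through frame interpolation" concrete, the sketch below shows the simplest possible interpolator: a linear cross-fade between two neighbouring frames. It is an illustrative baseline, not a VI-Lab method, and the function names `blend_interpolate` and `double_frame_rate` are hypothetical. Because it ignores motion, moving objects appear as ghosted double images; motion-aware interpolators, including learned ones, aim to avoid exactly this.

```python
import numpy as np


def blend_interpolate(frame0: np.ndarray, frame1: np.ndarray, t: float) -> np.ndarray:
    """Hypothetical helper: synthesise a frame at time t in [0, 1] by linear blending.

    Naive baseline only: no motion estimation, so moving content ghosts.
    """
    if not 0.0 <= t <= 1.0:
        raise ValueError("t must lie in [0, 1]")
    f0 = frame0.astype(np.float64)
    f1 = frame1.astype(np.float64)
    return (1.0 - t) * f0 + t * f1


def double_frame_rate(frames):
    """Hypothetical helper: insert a blended midpoint between consecutive frames.

    n input frames become 2n - 1 output frames.
    """
    out = []
    for a, b in zip(frames[:-1], frames[1:]):
        out.append(a.astype(np.float64))
        out.append(blend_interpolate(a, b, 0.5))
    out.append(frames[-1].astype(np.float64))
    return out
```

Motion-compensated methods keep the same interface (two frames and a time `t` in, one frame out) but warp each input towards time `t` before combining them.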

Mitigating Atmospheric Distortions: Atmospheric conditions can degrade the visual quality and interpretability of video during acquisition and subsequent processing. Fog and haze reduce contrast, while atmospheric turbulence caused by temperature variations or aerosols produces interference patterns that lead to blurred, wavering images. VI-Lab has created state-of-the-art methods for mitigating atmospheric distortion, particularly airborne turbulence, which can severely degrade a region of interest (ROI). Our turbulence mitigation algorithm is called CLEAR (Complex waveLEt fusion for Atmospheric tuRbulence).

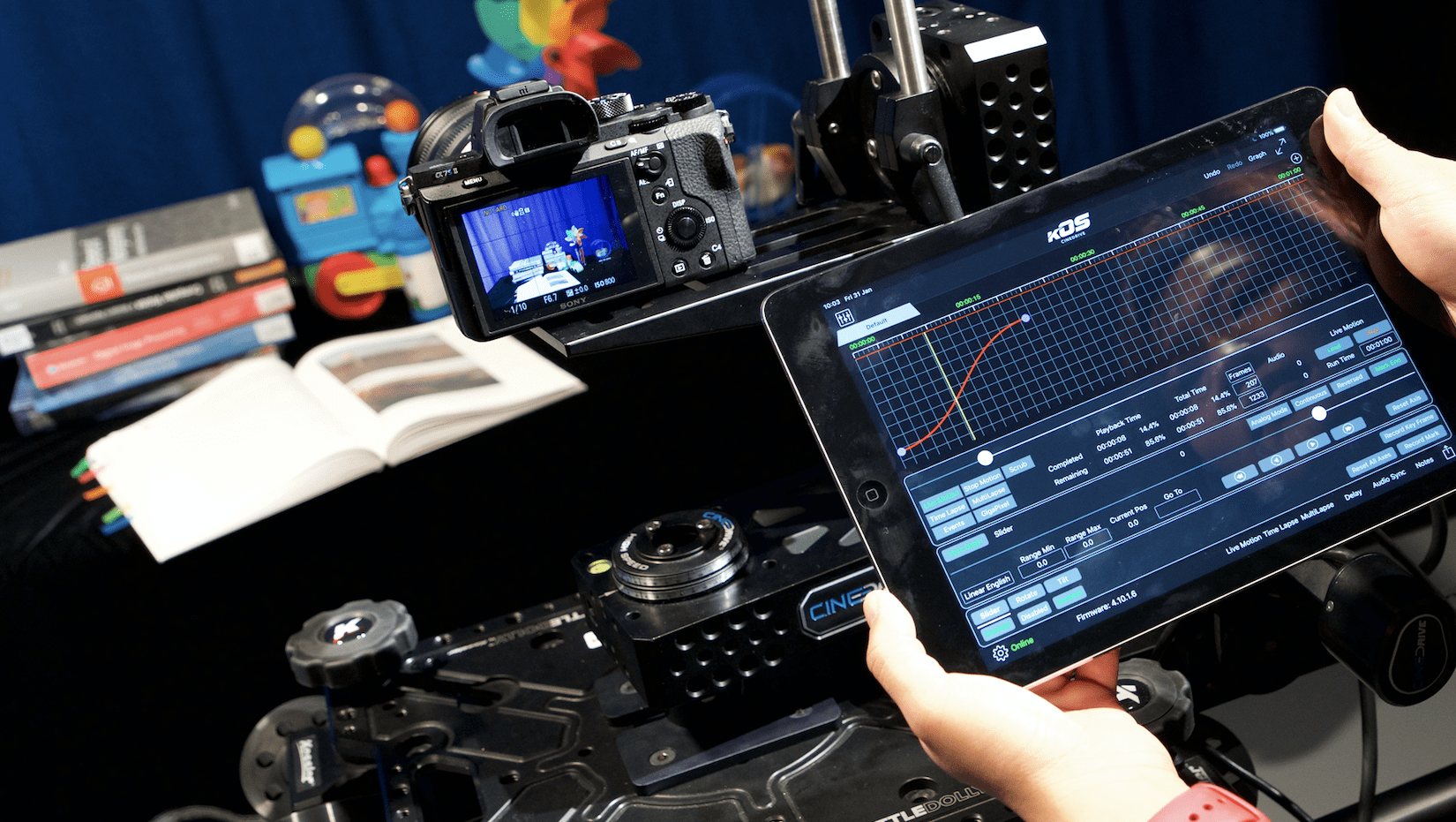
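The core idea behind wavelet-based fusion of turbulent frames can be sketched in a few lines: transform each registered frame, average the lowpass band, and keep the detail coefficient with the largest magnitude at each position, on the assumption that the locally sharpest frame has the strongest detail. This is a minimal sketch, not CLEAR itself. CLEAR uses complex wavelets, as its name indicates, whereas this example substitutes a single-level real Haar transform so that it runs with NumPy alone; it also assumes the frames are already registered and omits the other stages a practical pipeline needs. All function names are hypothetical.

```python
import numpy as np


def haar2d(img):
    """Single-level orthonormal 2-D Haar transform (stand-in for complex wavelets).

    Requires even height and width. Returns the lowpass band and a tuple of
    three detail bands, each half the input size in both dimensions.
    """
    if img.shape[0] % 2 or img.shape[1] % 2:
        raise ValueError("image height and width must be even")
    a, b = img[0::2, 0::2], img[0::2, 1::2]
    c, d = img[1::2, 0::2], img[1::2, 1::2]
    ll = (a + b + c + d) / 2.0
    d1 = (a - b + c - d) / 2.0  # differences between neighbouring columns
    d2 = (a + b - c - d) / 2.0  # differences between neighbouring rows
    d3 = (a - b - c + d) / 2.0  # diagonal differences
    return ll, (d1, d2, d3)


def ihaar2d(ll, details):
    """Exact inverse of haar2d."""
    d1, d2, d3 = details
    out = np.empty((2 * ll.shape[0], 2 * ll.shape[1]))
    out[0::2, 0::2] = (ll + d1 + d2 + d3) / 2.0
    out[0::2, 1::2] = (ll - d1 + d2 - d3) / 2.0
    out[1::2, 0::2] = (ll + d1 - d2 - d3) / 2.0
    out[1::2, 1::2] = (ll - d1 - d2 + d3) / 2.0
    return out


def fuse_frames(frames):
    """Fuse registered frames of one scene into a single estimate.

    Lowpass band: mean across frames, averaging out frame-to-frame fluctuation.
    Detail bands: per coefficient, keep the value with the largest magnitude,
    treating strong local detail as a proxy for local sharpness.
    """
    coeffs = [haar2d(np.asarray(f, dtype=np.float64)) for f in frames]
    ll = np.mean([c[0] for c in coeffs], axis=0)
    fused = []
    for k in range(3):
        stack = np.stack([c[1][k] for c in coeffs])  # (n_frames, h, w)
        best = np.argmax(np.abs(stack), axis=0)      # (h, w) frame index
        fused.append(np.take_along_axis(stack, best[None], axis=0)[0])
    return ihaar2d(ll, tuple(fused))
```

The max-magnitude rule is a common, simple choice for detail bands; practical decompositions normally use several levels rather than one.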

Cinematography and Automation: Drones have been extensively deployed as platforms for extending cinematography, enabling innovative shots in locations that are difficult to reach by other means. For autonomous drone systems in particular, shot types and default parameters must be established before operation. VI-Lab research has quantitatively investigated the relationship between viewing experience and UAV/camera parameters such as drone height and speed.
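Simple camera geometry shows why drone height and speed interact. Assuming a pinhole camera pointing straight down over flat ground (a simplification, since cinematic shots are rarely nadir), a static ground point moves across the image at f·v/h pixels per second, where f is the focal length in pixels, v the drone speed and h its height. The hypothetical helpers below compute this and the ground footprint; they illustrate the geometry only and are not the viewing-experience model from VI-Lab's study.

```python
import math


def focal_length_px(image_width_px, hfov_deg):
    """Pinhole focal length in pixels from image width and horizontal field of view."""
    return (image_width_px / 2.0) / math.tan(math.radians(hfov_deg) / 2.0)


def ground_footprint_m(height_m, hfov_deg):
    """Width of flat ground covered by a nadir camera at the given height."""
    return 2.0 * height_m * math.tan(math.radians(hfov_deg) / 2.0)


def image_speed_px_per_s(speed_mps, height_m, image_width_px, hfov_deg):
    """On-screen speed of a static ground point while the drone flies level."""
    return focal_length_px(image_width_px, hfov_deg) * speed_mps / height_m


def seconds_to_cross_frame(speed_mps, height_m, hfov_deg):
    """Time for a ground point to traverse the full frame width."""
    return ground_footprint_m(height_m, hfov_deg) / speed_mps
```

For a 1920-pixel-wide frame with a 90° horizontal field of view, flying at 10 m/s at 50 m gives 192 pixels per second; halving the height doubles the on-screen speed.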

Virtual Production Environments: Virtual production allows organisations to do in previsualisation what used to be done in post-production. In VI-Lab, we are addressing next-generation visual production methods through the MyWorld Strength in Places Programme and our Bristol and Bath Creative R&D Cluster. We have created simulations of drone camera platforms, based on photogrammetric representations of real environments, to support shot previsualisation, planning, training and rehearsal for both single and multiple drone operations. This is particularly relevant for live events, where there is only one opportunity to get it right on the day, and it contributes to enhanced productivity, improved safety and, ultimately, a better viewer experience.

Perceptually Optimised Image and Video Fusion: Effectively fusing two or more visual sources can provide significant benefits for visualisation, scene understanding, target recognition and situational awareness in multi-sensor applications. The output of a fusion process should be a single, more informative image (or video) that retains as much perceptually important information from the sources as possible. However, most fusion methods described in the literature do not use perceptual models to decide what information to retain from each source. VI-Lab researchers have developed perceptual image fusion methods that employ explicit luminance and contrast masking models to exploit the perceptual importance of each input image.
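As a rough illustration of contrast-driven fusion weights, the sketch below weights each source pixel by its local RMS contrast divided by its local mean luminance, a Weber-like normalisation that gives less weight to the same absolute variation in bright regions. This is a hypothetical stand-in, not the explicit luminance and contrast masking models used in VI-Lab's perceptual fusion work. It assumes co-registered, non-negative luminance images of equal size; the names `box_mean` and `contrast_weighted_fusion` and the window radius `r` are illustrative choices.

```python
import numpy as np


def box_mean(img, r):
    """Mean over a (2r+1) x (2r+1) window with edge padding, via an integral image."""
    k = 2 * r + 1
    p = np.pad(img, r, mode="edge")
    s = np.pad(p, ((1, 0), (1, 0))).cumsum(axis=0).cumsum(axis=1)
    return (s[k:, k:] - s[:-k, k:] - s[k:, :-k] + s[:-k, :-k]) / (k * k)


def contrast_weighted_fusion(images, r=3, eps=1e-6):
    """Fuse co-registered luminance images with per-pixel contrast weights.

    Weight = local RMS contrast / local mean luminance (a crude, hypothetical
    proxy for luminance masking). Weights are normalised to sum to one.
    """
    stack = np.stack([np.asarray(im, dtype=np.float64) for im in images])
    weights = []
    for im in stack:
        mean = box_mean(im, r)
        var = np.maximum(box_mean(im * im, r) - mean * mean, 0.0)
        weights.append(np.sqrt(var) / (mean + eps))
    w = np.stack(weights)
    total = w.sum(axis=0)
    n = len(images)
    # Where no source has measurable contrast, fall back to equal weights.
    w = np.where(total > eps, w / np.maximum(total, eps), 1.0 / n)
    return (w * stack).sum(axis=0)
```

Fusing a flat image with a textured one returns (almost exactly) the textured image, because the flat source carries no local contrast and so receives negligible weight.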

Datasets: Datasets are essential for training and evaluating algorithms used in video production. In particular, real-world data for colorisation, denoising and low-light enhancement frequently have no ground truth. VI-Lab researchers have therefore developed specialist datasets for denoising.
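A common way to obtain reference data when real noisy images have no ground truth is to capture many aligned frames of a static scene and average them: for zero-mean noise that is independent between captures, the noise standard deviation of the mean falls by a factor of √N. The sketch below illustrates this with synthetic data. It is a generic strategy, not a description of how VI-Lab's denoising datasets were built, and a real pipeline must also handle alignment, clipping and signal-dependent noise. The function names are hypothetical.

```python
import numpy as np


def pseudo_ground_truth(captures):
    """Average N aligned captures of a static scene into a low-noise reference.

    For independent zero-mean noise, the residual noise std is about sigma / sqrt(N).
    """
    stack = np.stack([np.asarray(c, dtype=np.float64) for c in captures])
    return stack.mean(axis=0)


def psnr(reference, estimate, peak=1.0):
    """Peak signal-to-noise ratio in dB; infinite for identical inputs."""
    diff = np.asarray(reference, dtype=np.float64) - np.asarray(estimate, dtype=np.float64)
    mse = np.mean(diff ** 2)
    return float("inf") if mse == 0 else 10.0 * np.log10(peak ** 2 / mse)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    clean = np.full((64, 64), 0.5)  # synthetic static scene
    captures = [clean + rng.normal(0.0, 0.05, clean.shape) for _ in range(64)]
    reference = pseudo_ground_truth(captures)
    print("single capture PSNR:", psnr(clean, captures[0]))
    print("64-frame average PSNR:", psnr(clean, reference))
```

With 64 captures the noise standard deviation drops by a factor of 8, so the averaged reference gains roughly 18 dB of PSNR over a single capture.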

Please follow the links below for a detailed description of our research:

- Drone Cinematography

- Virtual Production Environments for Drone Cinematography

- Image and Video Denoising, Colorisation, Enhancement

- Mitigating Atmospheric Distortions

- Low light image enhancement

- Artificial Intelligence in the Creative Industries: A Review

- Fast depth estimation for view synthesis

- Bristol and Bath R+D Creative Industry Cluster

- MyWorld: UKRI Strength in Places Programme

- What’s on TV?

- Datasets for image denoising

- Perceptually optimised video fusion

- Intelligent Frame Interpolation

- Denoising Imaging Polarimetry