Tilo Burghardt, Will Andrew, Jing Gao, Neill Campbell, Andrew Dowsey, S Hannuna, Colin Greatwood

Holstein Friesian cattle are the highest milk-yielding bovine type; they are economically important and especially prevalent within the UK. Identification and traceability of these cattle are not only required by export and consumer demands; many countries have also introduced legally mandatory frameworks.

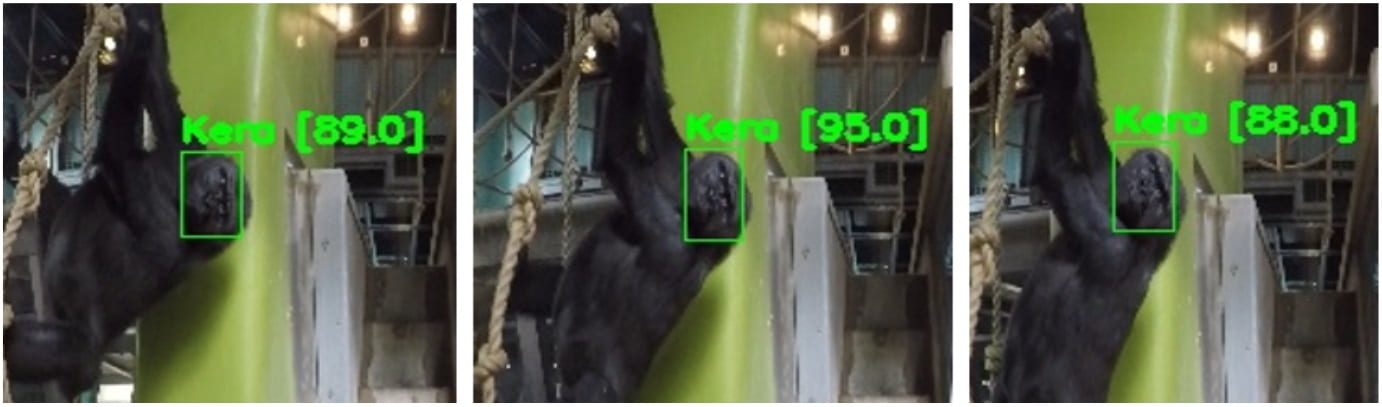

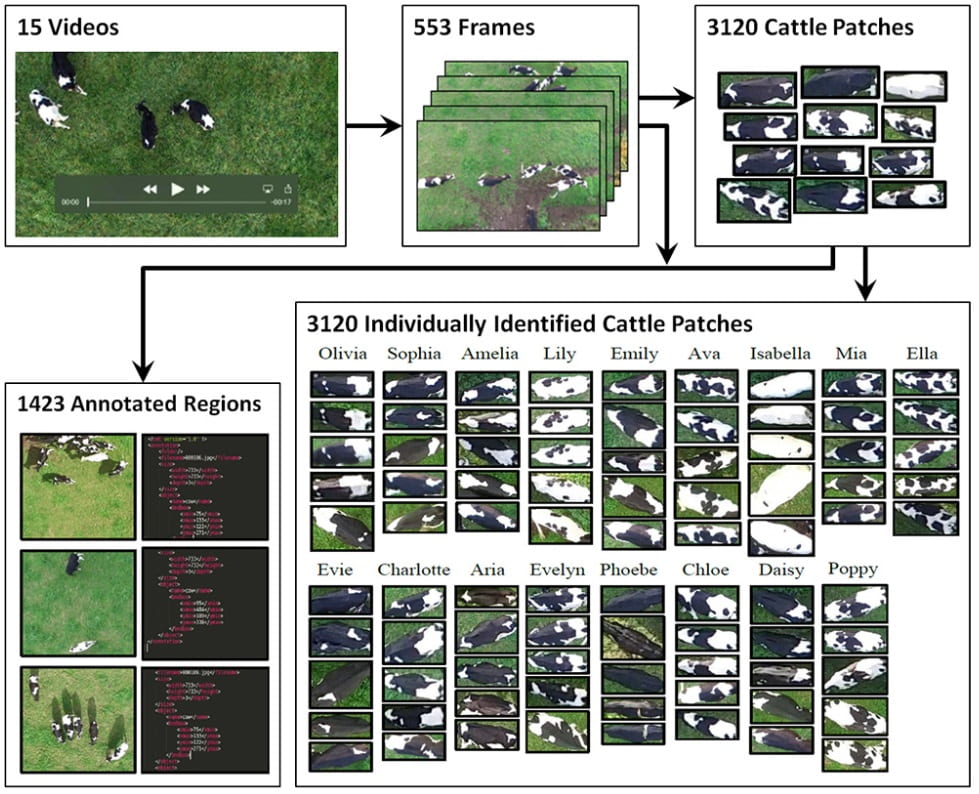

This line of work has shown that robust identification of individual Holstein Friesian cattle can be implemented automatically and non-intrusively using computer vision pipelines built on deep neural networks. In essence, the systems biometrically interpret the unique black-and-white coat markings to identify individual animals; identification can, for instance, happen via fixed in-barn cameras or via drones in the field.

This work is being conducted with the Farscope CDT, VILab and BVS.

Related Publications

W Andrew, C Greatwood, T Burghardt. Aerial Animal Biometrics: Individual Friesian Cattle Recovery and Visual Identification via an Autonomous UAV with Onboard Deep Inference. 32nd IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pp. 237-243, November 2019. (DOI:10.1109/IROS40897.2019.8968555), (arXiv PDF), (CVF Extended Abstract at WACVW2020)

W Andrew, C Greatwood, T Burghardt. Deep Learning for Exploration and Recovery of Uncharted and Dynamic Targets from UAV-like Vision. 31st IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pp. 1124-1131, October 2018. (DOI:10.1109/IROS.2018.8593751), (IEEE Version), (Dataset GTRF2018), (Video Summary)

W Andrew, C Greatwood, T Burghardt. Visual Localisation and Individual Identification of Holstein Friesian Cattle via Deep Learning. Visual Wildlife Monitoring (VWM) Workshop at IEEE International Conference on Computer Vision (ICCVW), pp. 2850-2859, October 2017. (DOI:10.1109/ICCVW.2017.336), (Dataset FriesianCattle2017), (Dataset AerialCattle2017), (CVF Version)

W Andrew, S Hannuna, N Campbell, T Burghardt. Automatic Individual Holstein Friesian Cattle Identification via Selective Local Coat Pattern Matching in RGB-D Imagery. IEEE International Conference on Image Processing (ICIP), pp. 484-488, ISBN: 978-1-4673-9961-6, September 2016. (DOI:10.1109/ICIP.2016.7532404), (Dataset FriesianCattle2015)